3.2 Algorithms

As indicated in the previous section, Google and all search engines function and exist because of algorithms. An algorithm is a set of instructions that helps something (a search engine) find an answer to a problem (an information need), some have described them as “computer programs designed to make predictions” (Brisson-Boivin & McAleese, 2021, p. 4). In an algorithm, a set of rules is followed in order. This can be very complex or seemingly simple, like a recipe or a math equation (Brisson-Boivin & McAleese, 2021). Internet companies develop and use algorithms to help users perform tasks online, but also to track, manipulate, make recommendations, and maximize a user’s time on a site (Sadagopan, 2019; Brisson-Boivin & McAleese, 2021; Saurwein & Spencer-Smith, 2021). Therefore, these rules are a set of decisions that are predetermined for a user, whose search behavior, in turn, decides (via the algorithm) what sort of advertisements and results they will see. In many cases this leads to unintended results for the users: some big, some small, some destructive, some harmless. Algorithms can make life easier, but they also limit a user’s exposure to vast amounts of information.

Concern about the control search engine companies have over information consumption is on the rise. Some governments are passing legislation that will require companies to explain what decisions their algorithms make. For example, in 2022 the Canadian Government proposed the Canadian Digital Charter Implementations Act which, if passed, will give citizens the right to request clarification on how companies apply automated ‘decision-making’ systems like algorithms and artificial intelligence to make recommendations or choose search paths and results (Gibbs & Castillo, 2021). This Act carries significant impact because algorithmic designs, and users’ lack of knowledge of them, can reinforce and exacerbate existing social inequalities. This is referred to as algorithmic bias (Brisson-Boivin & McAleese, 2021). Recall from chapter two that bias refers to the act of favoring one side over another.

3.2.1 Bias in Algorithms

Algorithmic rules are created by the people and the companies that program the system. Often programmers are unaware that they are including their personal biases into their coding. These individuals may favour a particular viewpoint, as everyone has their own biases. Those biases may be balanced and well-meaning, or they may promote injustices like racism and sexism (Noble, 2018). Because algorithms are privately owned by large corporations, it is extremely difficult to find out what or who influences their creation (Noble, 2018; Saurwein & Spencer-Smith, 2021). Studies have shown that at certain times throughout the history of search engines, the results generated by searching for certain topics have been very problematic. For example, researcher Safiya Umoja Noble realized in 2010 that a Google search for ‘black girls’ persistently retrieved explicit pornographic results. It wasn’t until the release of a new updated algorithm in 2012 that this offensive trend came to an end (Noble, 2018). Are there other examples? Test some searches to see if there are any hidden biases in the search algorithm.

3.2.2 Impact on Your Search

Let’s look at Google’s algorithmic rules to understand how bias can creep into search results. First, Google tries to guess the meaning of a search. For example, if a user types something in the form of a question “where is mercury?” versus “mercury” the results will be different. The algorithm splits words apart and searches them individually. So, for the above searches, the question might produce information about the planet mercury and its location in the universe or the element mercury and where it is placed in a thermometer. For the second search, Google would not search for location but for definitions of mercury. In this case, the algorithm is considering search history to try and guess what the searcher is looking for. If they had done previous searches on planets in the solar system, Google will probably assume they are looking for the planet not the element (Google, 2019a). This is quite harmless if people take the time to review their results and continue to research for the best information.

How does Google define relevant? It does this based on two things: the keywords used–it searches for the keywords that match the search (especially in webpage titles); second, the newest sites–it selects the webpages most recently published or updated based on the topic. The highest ranked website (whichever the algorithm deems most important) appears at the top of the results list. Google also attempts to identify websites that it considers to be the most reliable resource on a topic–it does this based on how many people have visited the site. So, the website with the most visitors is considered the most trustworthy (Google, 2019a). This also happens with topics that are trending or popular at the time of the search (Brisson-Boivin & McAleese, 2021). The most ‘usable’ sites, as categorized by the algorithm, are also driven to the top of searches. Websites that are easy to use or that display well on a variety of browsers are more likely to be featured higher in a search. So, too, are websites that load quickly (Smith, 2015). Does popularity, usability, functionality or newness make something more relevant?

Endless Advertising. Endless Revenue

You may have noticed that ads are always at the top of your search results. Google Ads (n.d.) allows companies to pay for the top spot. These companies are charged each time a searcher clicks on the link and navigates to the company’s website. Companies get to pick which keyword searches their ad will appear on (Google Ads, n.d.). So, a company that does kitchen renovations might pay to have a link to their website pushed to the top every time an individual searches the word “fridge” in their geographic area. Perhaps the user is searching for a new fridge or a fridge accessory but based on their keywords, the renovation company will appear as the top result, even if the searcher is not looking for a renovation company.

Another determining factor in a Google search is location. As a person searches, they will notice advertising for local businesses. Although this is useful when looking for a place to eat, it might interrupt or stall other research activities (Smith, 2015). Past searches also impact future searches. A person who uses a Google account regularly, especially someone who doesn’t clear their cookies and browser history, often will notice products that reflect past searches appearing in advertising everywhere they go in the online environment. Search engine algorithms track spending habits and purchases, then adjust results and advertisements accordingly (Google, 2019a).

Manipulative Coding

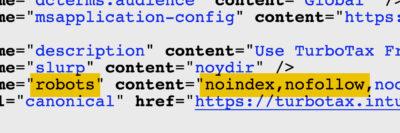

Just as a company can pay to have their website appear at the top of a search engine, so can they write code so that their website does not appear in a results list. Why would a company not want to appear in a results list? Sometimes, coding is added to stop the public from accessing internal or private company websites. In other cases, it’s to suppress sites that will take potential profits away. In 2019, the Federal Trade Commission in the United States sued the income tax company Intuit Turbotax for manipulating code to avoid profit loss (Elliott, 2019). The company had written the html code on a specific site to hide a ‘file for free’ option. At the same time, they coded the site’s paid version to appear in the Google search results. So people who searched for a site to file taxes and who may have been eligible for the US Government Free File Deal were driven to a paid site instead, and many did pay–as they were unable to see the free option. Turbotax and its smaller counterpart H & R Block have been called out publicly for using the coding “noindex=nofollow” on these pages (Elliott, 2019). Months after the publication of the news story referenced, TurboTax removed the code.

3.2.3 Filter Bubbles

Filter bubbles, also known as preference bubbles, alter the way people encounter ideas, and impact how they view and understand information. As a user searches, algorithms keep track of the links they click, then continuously deliver similar content to that person (Brisson-Boivin & McAleese, 2021). This creates a list of search results that is personalized and may contain websites that people with different interests would not see (Zimmer et al., 2019; Brisson-Boivin & McAleese, 2021). This has two outcomes; while the algorithms are selecting and displaying results that will appeal to each individual user, they are also cutting the user off from information the algorithm has selected as not appealing to the user (GCFLearnFree.org, 2018; Brisson-Boivin & McAleese, 2021). If an individual is only exposed to one side of a story, they may believe it to be true and become overconfident in their opinion, and close-minded about other perspectives. This video about How Filter Bubbles Isolate You describes the issue very well.

To understand how a filter bubble may work, we will look at the varying opinions about the issue of climate change. Thousands of online articles discuss the fact that humanity is quickly warming the globe to dangerous levels that will become unlivable. While at the same time, thousands of online articles argue that climate change is a myth being spread to manipulate people into behaving a certain way. Depending on a person’s search behavior, they are likely to encounter one or the other. A person who is an avid gardener often searching for ways to live sustainability, will likely see the articles that maintain that climate change is an issue. An individual who is suspicious of the government, and commonly shares posts that are critical of government decisions related to things like carbon taxes, is more likely to come across articles critical of climate change. As an individual selects the article, on either side, they will continue to see articles that confirm what they previously clicked on. This can lead a user down rabbit holes as they are continuously fed more and more articles on the same topic, often with content increasing in extremity (Saurwein & Spencer-Smith, 2021; Hall, 2022).

The biggest issue with all of this is that although human beings create algorithms, those who are engaging with them by using the Internet do not consent to the filtering and censoring of their search results. Since algorithms are embedded into the entire web experience, it is very difficult to avoid being placed in a filter bubble, even if it is partially of our own creation.

There are some ways to seek out varied sources and perspectives, try:

- Following accounts or friends who have different, even disagreeable opinions.

- Clicking on articles that are both personally agreeable and disagreeable to get more balance (Zimmer et al., 2019).

- Clearing search history and cookies.

- Using a search tool that does not collect data.

- DuckDuckGo is a search engine that claims to protect privacy and avoid the creation of filter bubbles.

In relation to searching, an algorithm is a set of instructions that helps a searcher find an answer to a question.

A filter bubble occurs when a searcher is continuously exposed to similar types of content and information - placing them into a virtual bubble.

A phenomenon that occurs when an individual discovers one source of online information and then continues clicking through threaded information within the original article, video, or content. As one clicks through to different linked content, they may discover the content becoming more and more extreme or bizarre.